Structuring An Unmoderated User Test

When you’re running an unmoderated user test, it’s important to structure the test around a single question or hypothesis that is tied to one metric your assistant affects.

Determine The Test Objective

Just as you would when structuring an A/B test on your live conversational experience, you need to define a hypothesis when you’re conducting an unmoderated user test on a high-fidelity prototype before launch. What are you hoping to get a yes or no answer to? For example:

- Are users able to get responses about product or service information easily?

- If users are able to make a purchase through your conversational assistant, how many steps should it take?

- Do users understand the actions they can take within your assistant through context?

Write Screener Questions

Depending on the target audience for the product or service your conversational assistant will support, you’ll want a wide or narrow audience for your user testing. With screener questions, you can narrow or widen the potential audience for your test by asking about behavioral and demographic factors.

Make sure to keep your questions open-ended. Questions that are too pointed could lead potential participants to answer in anticipation of what you’re looking for.

Determine The Task To Test

Based on the test objective, you should be able to provide instructions to your users as well as the context of the tasks. That way your test participants can approach your experience with a similar mindset to your customers when your experience goes live.

Finalize The Test With A Survey

Watching the recordings of test participants going through your experience doesn’t always give you enough feedback to come away with concrete action items. Adding a survey at the end that asks open-ended questions about the experience can help you qualify the feedback and make appropriate changes.
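If it helps to see the four steps above in one place, here is a minimal sketch of a test plan written down as a small Python structure. Everything in it is an assumption for illustration: the `UserTestPlan` name, its fields, and the example metric are hypothetical, and none of it is part of Voiceflow or UserTesting.

```python
from dataclasses import dataclass, field


@dataclass
class UserTestPlan:
    """One unmoderated test: a single hypothesis tied to a single metric."""

    hypothesis: str  # the yes/no question the test should answer
    metric: str  # the one metric the hypothesis is tied to
    screener_questions: list[str] = field(default_factory=list)
    task_instructions: str = ""  # instructions plus context for participants
    survey_questions: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return the gaps to fix before the test is launched."""
        issues = []
        if not self.hypothesis.strip().endswith("?"):
            issues.append("hypothesis should be phrased as a question")
        if not self.metric.strip():
            issues.append("no metric named")
        if not self.screener_questions:
            issues.append("no screener questions")
        if not self.task_instructions.strip():
            issues.append("no task instructions or context")
        if not self.survey_questions:
            issues.append("no closing survey questions")
        return issues


# Example: the hypothesis comes from the list above; the metric is made up.
plan = UserTestPlan(
    hypothesis="Do users understand the actions they can take within your assistant through context?",
    metric="task completion rate",
)
remaining = plan.problems()
```

Here `remaining` lists the three pieces that are still missing (screener questions, task instructions, and survey questions), which makes a handy checklist before you build the test.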

Run Unmoderated User Testing With Voiceflow

13,241 Voiceflow prototypes have been shared since the product functionality was launched less than a year ago to help facilitate unmoderated user testing of chatbots, voice assistants, IVRs, in-car assistants, and more.

Running your own unmoderated user testing experience with Voiceflow Prototyping and Transcripts is easy to scale and allows you to host all of your user feedback in one place.

Let’s walk through how to set up an unmoderated user test using Voiceflow and Usertesting.com.

Set Up Your Voiceflow Prototype

Before standing up your test in Usertesting.com, you’ll need to make sure your Voiceflow prototype is branded and shareable.

Depending on the kind of conversational experience you’re testing, you can choose to have the prototype use chat inputs or voice inputs.

You can also add your company’s HEX colors and logo to the prototype so it has the look and feel of your live experience.

Best Practices for Voice Assistants

If you are testing voice experiences in Usertesting.com, there are some key instructions you should add to your test so that your participants have a smoother experience:

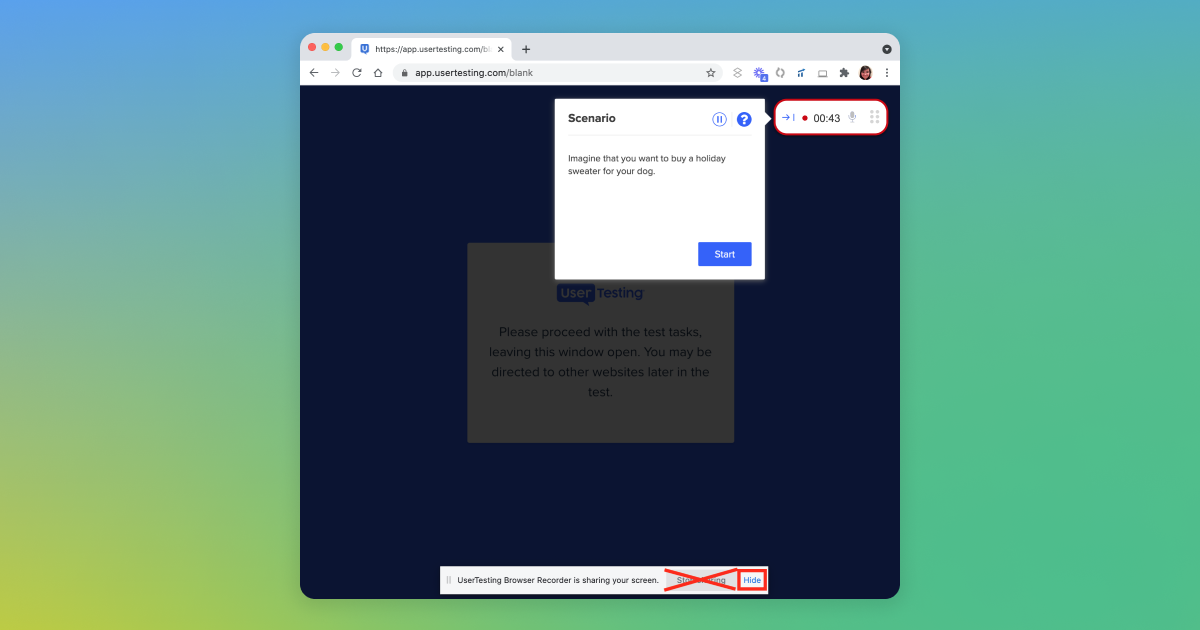

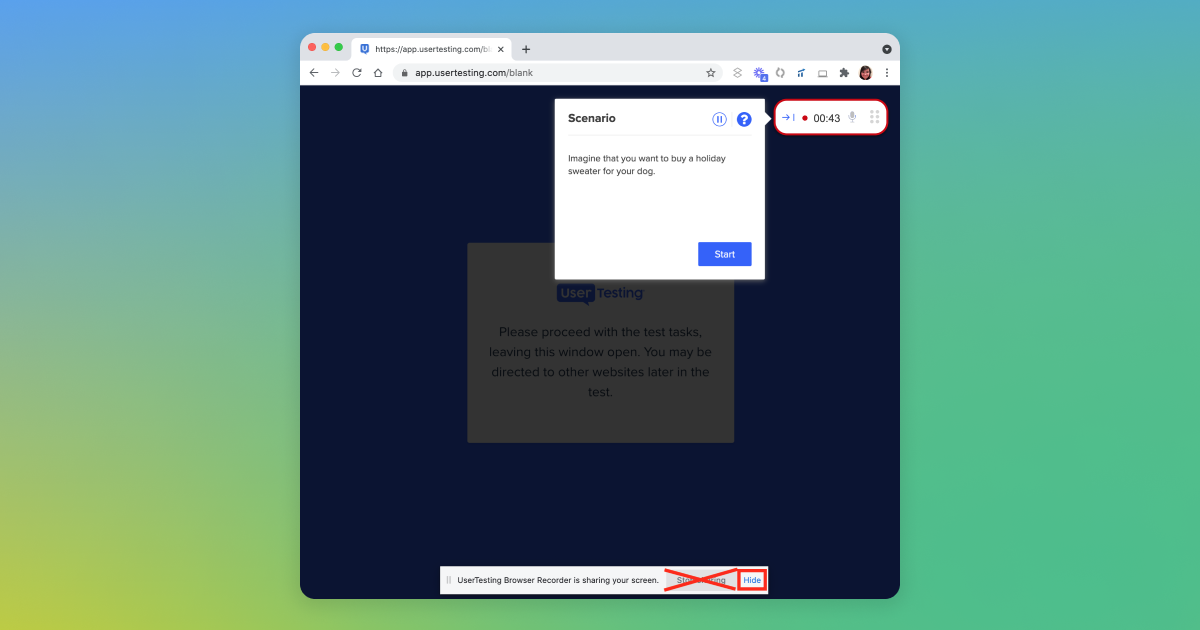

In UserTesting, participants record their screens and their voices. Helping them tell the UserTesting voice settings apart from the Voiceflow voice settings makes for a smoother test.

The screen and voice recording settings are at the top right-hand corner of your participants’ screens.

The screen sharing settings are at the bottom of their screens. Ask participants to “hide” those settings so that the Voiceflow settings are clearer.

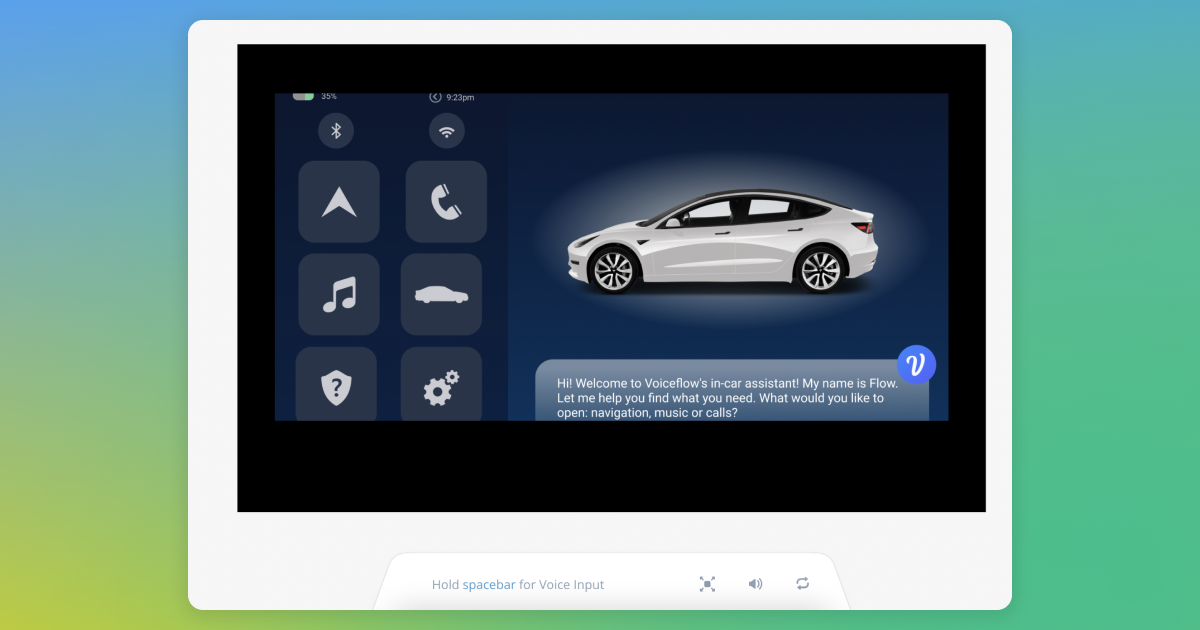

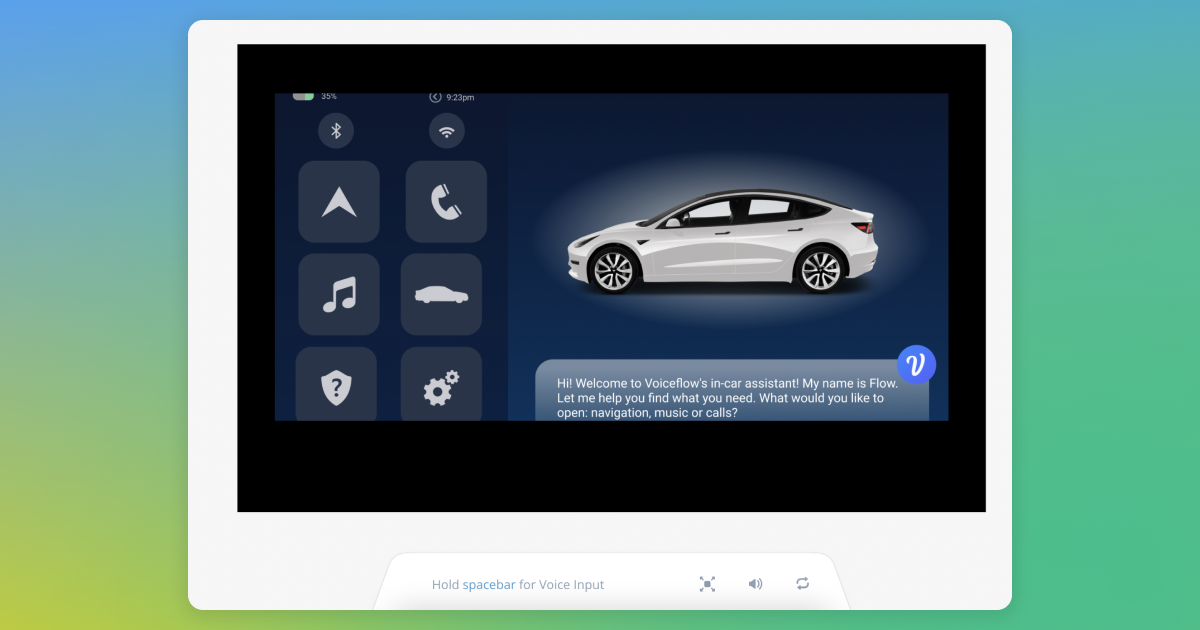

When you’re testing a voice experience in Voiceflow, the participant’s voice settings are at the bottom of the screen, and the participant holds the spacebar to indicate that they are ready to respond.

The Voiceflow prototype waits a few seconds after the spacebar is held before it starts recording. Ask your participants to wait a few seconds before they start speaking, and to wait again after they finish speaking before they let go of the spacebar, so that the prototype picks up the entire utterance.

Setting Up Your Post-Experience Survey

You can always use separate survey software to capture feedback after the experience is over, but that adds another tab you need to keep open when you’re reviewing the results of your user tests.

Instead, you can incorporate a survey at the end of your Voiceflow conversation designs. Using a Flow, or reusable component, you can navigate your participants to an end-of-experience survey that’s already built into your conversation design. That way, when you’re reviewing the transcripts for all of your users, you have their conversations and their feedback in one central place.

This also helps with identifying your participants. Because everyone going through your tests is unknown to your instance, matching their feedback with their transcript would be nearly impossible without some sort of user ID. Building the survey into the conversation keeps everything centralized and easier to keep track of.
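To make the user ID point concrete, here is a rough sketch of grouping conversation turns and survey answers by participant. The record shapes and field names (`user_id`, `text`, `question`, `answer`) are assumptions made up for this example; they are not the format of any Voiceflow or UserTesting export.

```python
from collections import defaultdict

# Hypothetical records, invented for illustration only.
transcript_turns = [
    {"user_id": "p-01", "text": "What are your store hours?"},
    {"user_id": "p-01", "text": "Thanks, that's all."},
    {"user_id": "p-02", "text": "I want to buy paint."},
]
survey_answers = [
    {"user_id": "p-01", "question": "What was confusing?", "answer": "Nothing."},
    {"user_id": "p-02", "question": "What was confusing?", "answer": "Checkout."},
]


def group_by_participant(turns, answers):
    """Collect each participant's turns and survey feedback under one ID."""
    sessions = defaultdict(lambda: {"turns": [], "feedback": []})
    for turn in turns:
        sessions[turn["user_id"]]["turns"].append(turn["text"])
    for item in answers:
        sessions[item["user_id"]]["feedback"].append(
            (item["question"], item["answer"])
        )
    return dict(sessions)


sessions = group_by_participant(transcript_turns, survey_answers)
```

Each entry in `sessions` now holds one participant’s conversation next to their feedback. Without the shared ID there would be nothing to group on, which is why keeping the survey inside the same conversation saves so much manual matching.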

💡 If you’re testing an Alexa skill specifically, you can use their Beta Testing feature for prototyping and user testing so you don’t have to push your skill live during testing.

Unmoderated User Testing Helps Develop Better Conversational Experiences

User testing helps designers gain valuable insights into the outcomes of the conversations they’ve designed.

"Within one week of using Voiceflow for IVR user testing, we scaled the number of tests run from 12 to 300 - all in 50% of the time."

Molly, Sr. UX Designer, Home Depot

If your company doesn’t currently use UserTesting to conduct their user tests, you can always drop your prototype link in our community and ask your fellow conversation design peers for a hand! Join the conversation in the community today.
