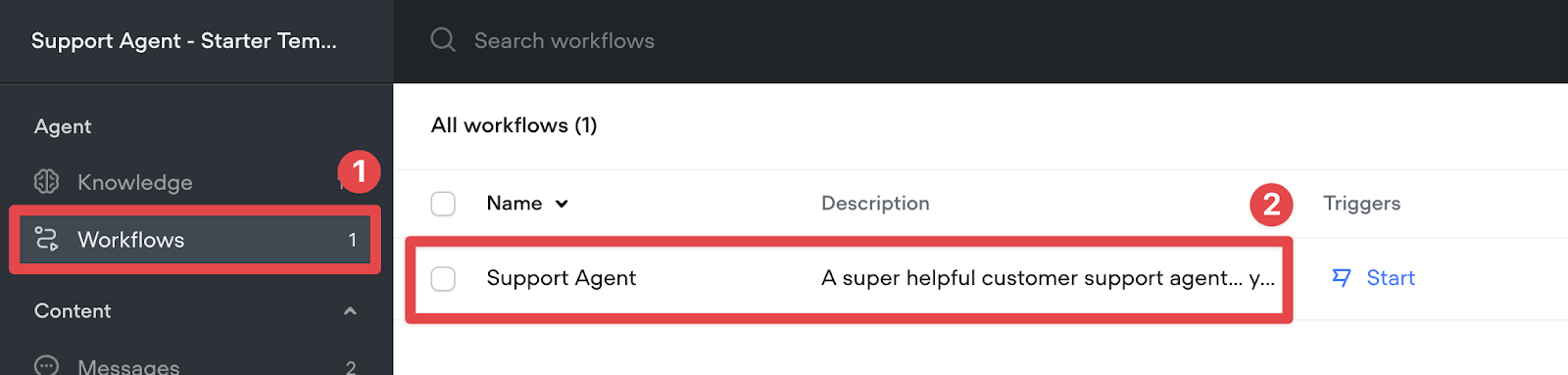

Now that you’ve imported data into your agent’s Knowledge Base, it’s time to try querying it! To get started, open the Support Agent workflow.

Capturing the user’s question

First, we need to capture the user’s question. The easiest way to do this is using the capture step, with it set to capture the entire user reply. We’ll save the reply to the `last_utterance` variable.

We’ll also add a message step to prompt the user for their question.

Now that we’ve captured the question, it’s time to look up the answer!

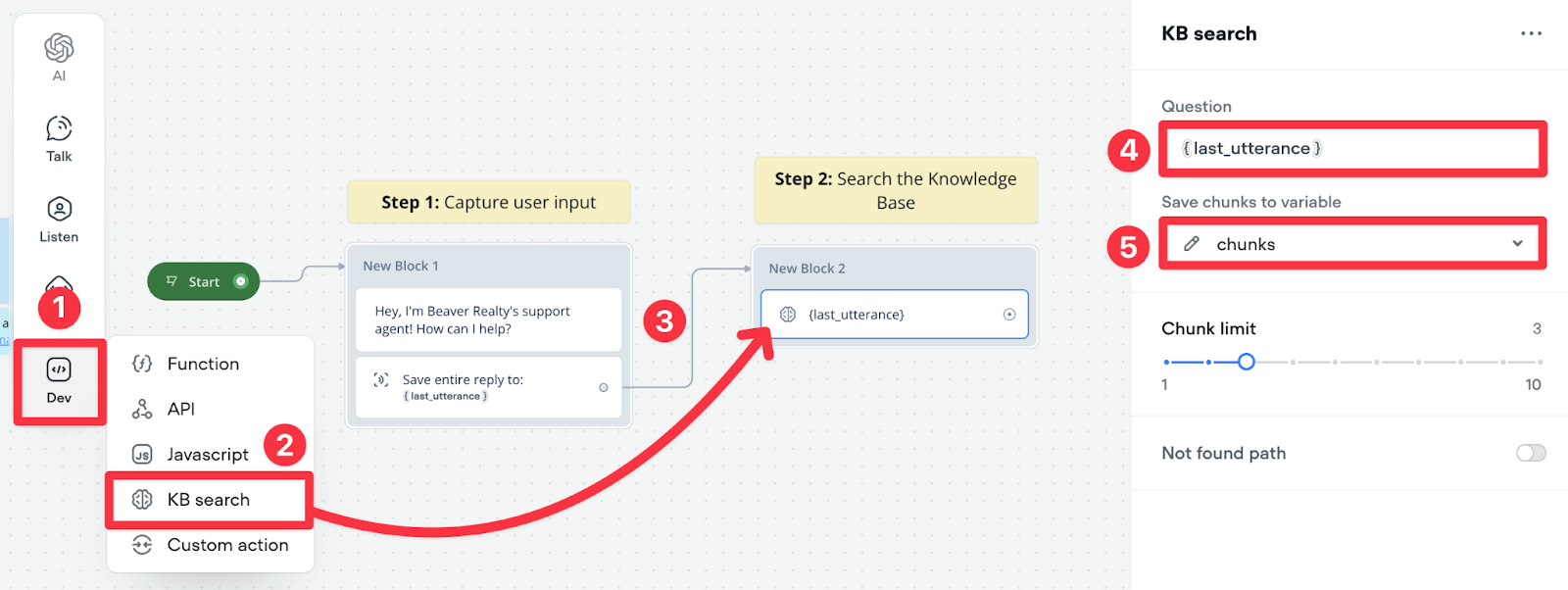

Querying the Knowledge Base

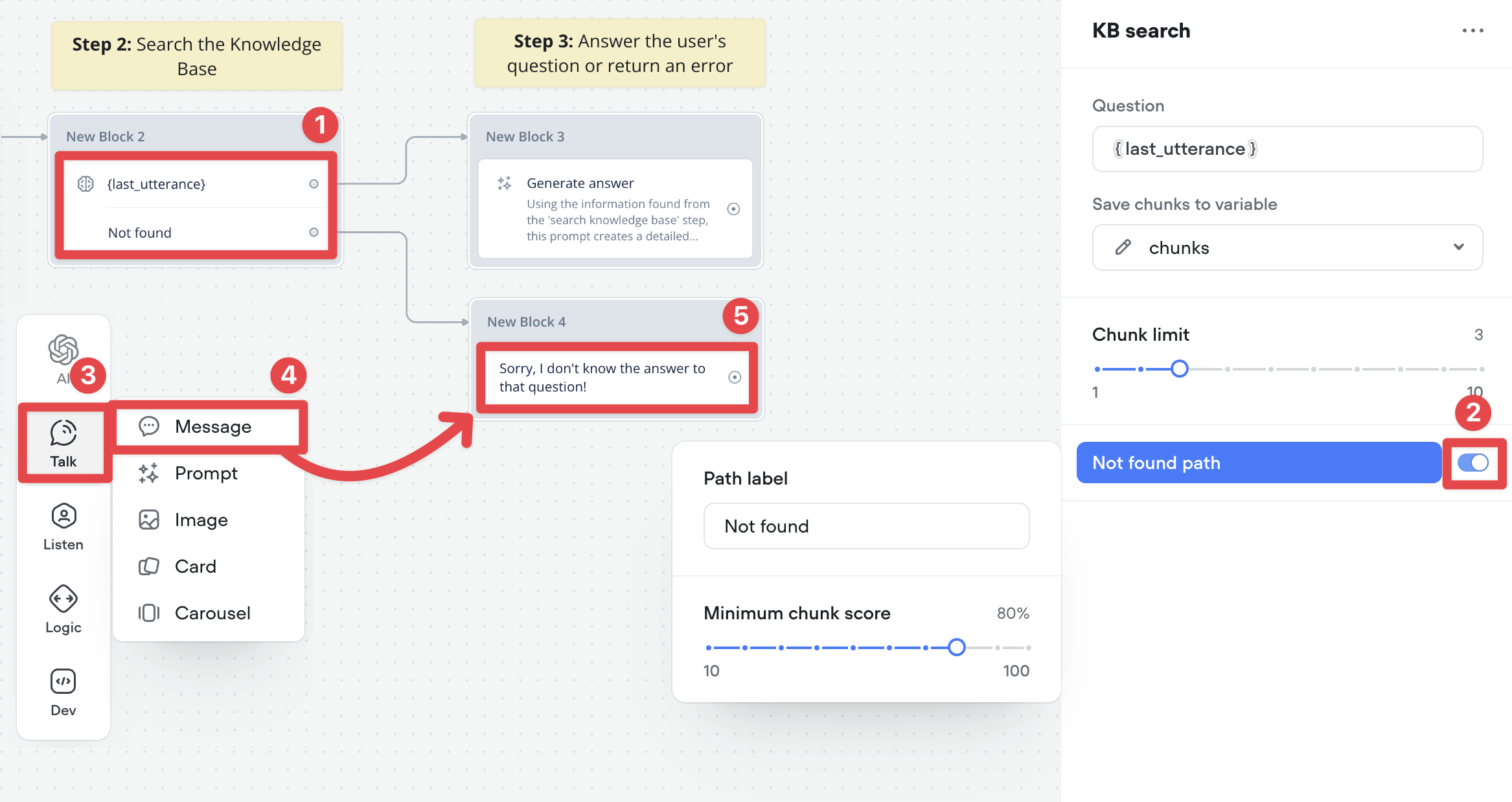

We can use the KB Search step to query our agent’s Knowledge Base. Add it to the canvas (it’s under the Dev menu in the sidebar), and connect it to the previous block. Then, set the Question to {last_utterance} and save chunks to the chunks variable.

Huh, what are chunks?

When we query our Knowledge Base, it doesn't return entire documents - it returns chunks of data. These are small pieces of information that have been split up from your original content to make searching more accurate.

Think of it like this: instead of looking through an entire book to find the answer to a question, the system finds and returns the most relevant paragraphs. These are the "chunks". Of course, in the real world, a chunk won't always be a paragraph.

The number of chunks returned depends on the chunk limit set in the KB Search step. In the example above, the limit is three. That means our agent will return up to three of the chunks most closely related to our user's query.
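To make chunks and the chunk limit concrete, here is a minimal Python sketch. It is not how Voiceflow works internally: the sample text is made up, splitting on blank lines is a simplification, and the word-overlap score is a toy stand-in for the semantic similarity a real Knowledge Base would use. The function names are hypothetical too.

```python
import re

# Made-up sample content, standing in for an imported document.
DOCUMENT = """Condos in downtown Toronto are popular with first-time buyers.

Property taxes in Toronto are billed by the city.

Open houses usually run on weekends.

A home inspection is recommended before closing."""


def split_into_chunks(text: str) -> list[str]:
    """Split on blank lines. Real chunking is usually more sophisticated."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def words(text: str) -> set[str]:
    """Lowercase the text and return its distinct words, without punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def overlap_score(question: str, chunk: str) -> float:
    """Toy relevance score: the share of question words found in the chunk."""
    question_words = words(question)
    if not question_words:
        return 0.0
    return len(question_words & words(chunk)) / len(question_words)


def search(question: str, chunks: list[str], chunk_limit: int = 3) -> list[str]:
    """Return at most chunk_limit chunks, most relevant first."""
    ranked = sorted(chunks, key=lambda c: overlap_score(question, c), reverse=True)
    return ranked[:chunk_limit]


chunks = search("What are property taxes in Toronto?", split_into_chunks(DOCUMENT))
for chunk in chunks:
    print(chunk)
```

The document has four chunks, but only three come back because of the limit. Notice that the limit alone does not filter out weak matches: the third result here has nothing to do with the question.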

If you're technical, here's a great article that explains what's going on behind the scenes. If you're not, don't worry! Voiceflow will handle the difficult stuff.

Answering the user's question

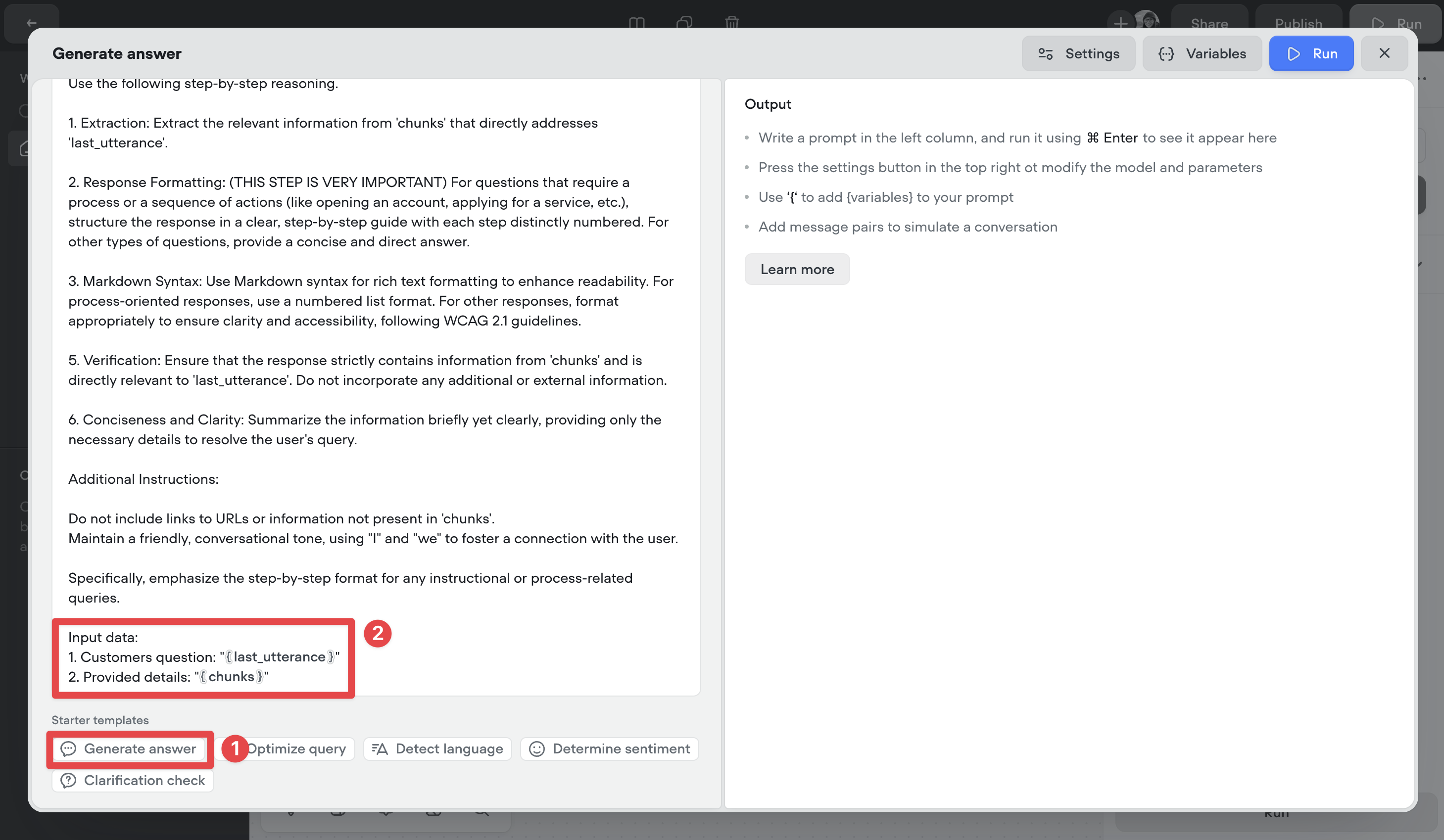

As the KB Search step returns raw chunks of information rather than a fully-written answer to the user's question, we should use the prompt step to write a human-understandable response to the query. Add one to your canvas, connect it to the previous step, then click New prompt.

To make life easy, we've made a Generate answer prompt template, so give it a click. You'll also want to make sure that the {last_utterance} and {chunks} variables are correctly linked, as shown in the screenshot below.

This prompt gives our agent specific instructions on how it should format answers, which information it should and shouldn't include, as well as the tone it should use. If you'd like, you can change this prompt to include extra instructions!
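If you're curious what "linking" the variables amounts to, it is roughly a fill-in-the-blanks operation on the prompt text. The Python sketch below shows the idea under stated assumptions: the template is a shortened illustration rather than the full Generate answer prompt, and `render_prompt` is a hypothetical helper, not a Voiceflow function.

```python
# Shortened, illustrative template. The real prompt contains more instructions.
TEMPLATE = (
    "Answer the customer's question using only the provided details.\n"
    'Customer question: "{last_utterance}"\n'
    'Provided details: "{chunks}"'
)


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {name} placeholder with the matching variable's value."""
    rendered = template
    for name, value in variables.items():
        rendered = rendered.replace("{" + name + "}", value)
    return rendered


prompt = render_prompt(
    TEMPLATE,
    {
        "last_utterance": "Do you list condos?",
        "chunks": "We list condos and detached homes.",  # made-up chunk text
    },
)
print(prompt)
```

If a variable is not linked, its placeholder has nothing to be replaced with, and the model never sees the user's question or the retrieved chunks. That is why it's worth double-checking both links.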

Handling not found answers

While our agent is pretty smart, it can't answer everything. For example, as it is an agent for a realtor, it's unlikely to be able to answer "how big is the largest ferret in the world?". To create a good user experience, we should make our agent tell the user if it can't answer their question.

Close the prompt window, and click on the KB Search step. Then, turn on the Not found path. This lets us connect to a step if our agent doesn't find the answer to a query.

Then, add a message step, connect it to the Not found path, and add an error message. In a real-world production-grade agent, you could do a different action here, for example, suggesting other topics the agent can answer, forwarding the user to the support team, or saving the failed query to an Airtable database.

Wait, what are minimum chunk scores?

In the screenshot above, you can see a minimum chunk score option. In short, this answers the question "how closely related to the user's query does a chunk need to be in order to be returned?" In this example, a chunk needs to be at least 80% related.

If you're finding that your agent is returning a bunch of irrelevant data, you might need to increase the minimum chunk score. Likewise, if you're searching for something that is in the Knowledge Base but it's not being returned, you might need to decrease it.
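Here is a small Python sketch of how a minimum chunk score and a chunk limit could work together. It assumes scores run from 0.0 to 1.0 and that the Not found path is taken when no chunk reaches the minimum. Both are assumptions made for illustration, and the names and scores are invented.

```python
from dataclasses import dataclass


@dataclass
class ScoredChunk:
    text: str
    score: float  # assumed scale: 0.0 (unrelated) to 1.0 (closely related)


def filter_chunks(
    candidates: list[ScoredChunk], min_score: float = 0.8, chunk_limit: int = 3
) -> list[ScoredChunk]:
    """Keep chunks at or above min_score, best first, capped at chunk_limit."""
    kept = [chunk for chunk in candidates if chunk.score >= min_score]
    kept.sort(key=lambda chunk: chunk.score, reverse=True)
    return kept[:chunk_limit]


def next_path(kept: list[ScoredChunk]) -> str:
    """Pick the path to follow: 'found' if anything survived, else 'not_found'."""
    return "found" if kept else "not_found"


# Invented candidates for a single query.
candidates = [
    ScoredChunk("Property taxes are billed by the city.", 0.91),
    ScoredChunk("Condos are popular with first-time buyers.", 0.84),
    ScoredChunk("Open houses usually run on weekends.", 0.42),
]

for min_score in (0.4, 0.8, 0.95):
    kept = filter_chunks(candidates, min_score=min_score)
    print(min_score, len(kept), next_path(kept))
```

Raising the minimum from 0.8 to 0.95 drops every candidate and sends the conversation down the Not found path, while lowering it to 0.4 lets the weakly related chunk through.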

Finishing off our agent

Great agents work in a loop: once the agent has finished with one query, it offers to help with another. Add a message step to the canvas that asks the user how else the agent can help. Connect the two previous steps (the prompt step and the Not found message) to it, then connect it to the capture step.
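The finished flow can be pictured as a simple loop. The Python sketch below simulates it with scripted user replies. Everything in it is a stand-in: `kb_search` is a hypothetical keyword lookup rather than the KB Search step, the knowledge base is a made-up dictionary, and joining the chunks stands in for the prompt step.

```python
def kb_search(question: str, knowledge_base: dict[str, str]) -> list[str]:
    """Hypothetical stand-in for KB Search: return entries whose keyword appears."""
    lowered = question.lower()
    return [text for keyword, text in knowledge_base.items() if keyword in lowered]


def run_conversation(user_replies: list[str], knowledge_base: dict[str, str]) -> list[str]:
    """Simulate the flow and return every message the agent would send."""
    transcript = ["What would you like to know?"]  # opening message step
    for last_utterance in user_replies:  # capture step
        chunks = kb_search(last_utterance, knowledge_base)
        if chunks:
            transcript.append(" ".join(chunks))  # stands in for the prompt step
        else:
            transcript.append("Sorry, I can't answer that question.")  # Not found path
        transcript.append("How else can I help?")  # both paths rejoin here
    return transcript


# Made-up knowledge base content.
knowledge_base = {"property tax": "Property taxes are billed by the city."}
transcript = run_conversation(
    ["Who bills property tax?", "How big is the largest ferret in the world?"],
    knowledge_base,
)
for message in transcript:
    print(message)
```

Both branches end at the same follow-up message, which leads back to the capture step. That is the same wiring as connecting both paths to one message step on the canvas.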

Testing our agent

Our agent is now ready for testing! If you ask it a question that's unrelated to Toronto real estate, it'll tell you that it can't answer that question. If you ask it about something in the Knowledge Base, it'll answer your question! Remember, you can tweak the agent's response, including its length and tone, by modifying the Generate answer prompt.

Resources

Generate Answer Prompt

The generate answer prompt is available inside the Voiceflow Creator, but here it is for reference!

System prompt:

You are an AI customer support agent.

Conversation history:

{vf_memory}

User prompt:

You are an AI customer support bot helping a customer with their question. When communicating with guests, follow these guidelines:

Use the following step-by-step reasoning.

1. Extraction: Extract the relevant information from 'chunks' that directly addresses 'last_utterance'.

2. Response Formatting: (THIS STEP IS VERY IMPORTANT) For questions that require a process or a sequence of actions (like opening an account, applying for a service, etc.), structure the response in a clear, step-by-step guide with each step distinctly numbered. For other types of questions, provide a concise and direct answer.

3. Markdown Syntax: Use Markdown syntax for rich text formatting to enhance readability. For process-oriented responses, use a numbered list format. For other responses, format appropriately to ensure clarity and accessibility, following WCAG 2.1 guidelines.

5. Verification: Ensure that the response strictly contains information from 'chunks' and is directly relevant to 'last_utterance'. Do not incorporate any additional or external information.

6. Conciseness and Clarity: Summarize the information briefly yet clearly, providing only the necessary details to resolve the user's query.

Additional Instructions:

Do not include links to URLs or information not present in 'chunks'.

Maintain a friendly, conversational tone, using "I" and "we" to foster a connection with the user.

Specifically, emphasize the step-by-step format for any instructional or process-related queries.

Input data:

1. Customers question: "{last_utterance}"

2. Provided details: "{chunks}"